Ryan Hu (10) | STAFF REPORTER

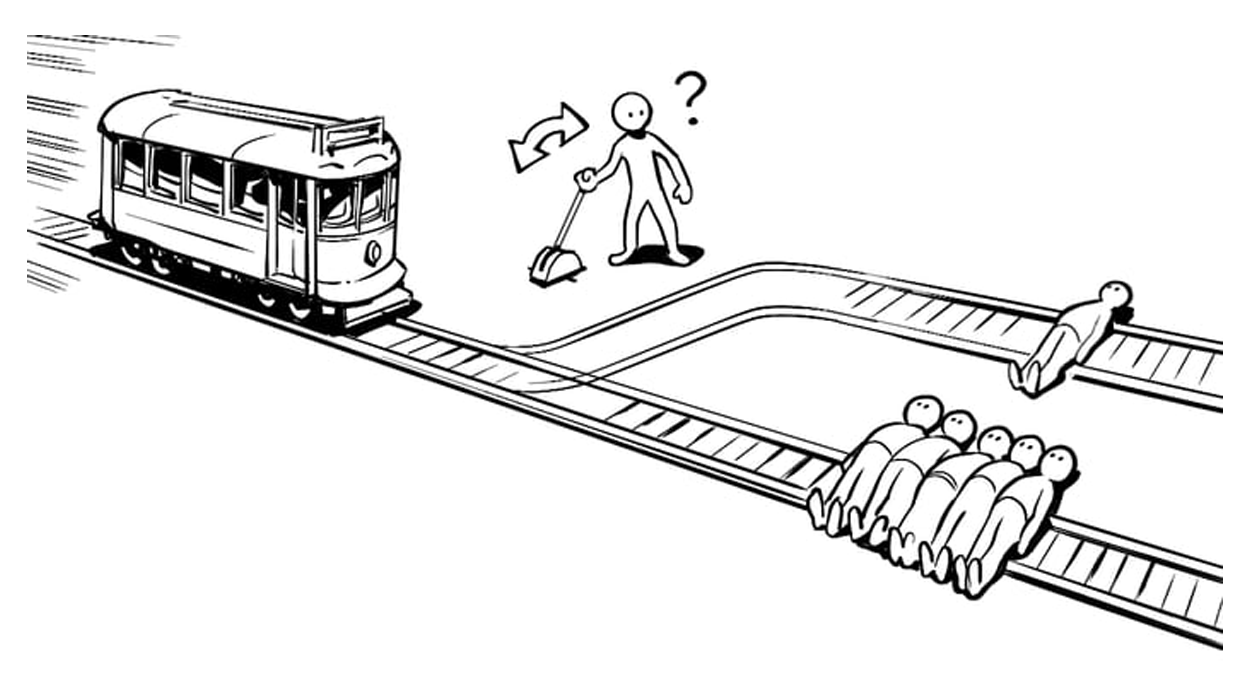

A runaway trolley is barrelling down the tracks. Further ahead, five people are tied up and left on the line. As the railway operator, you can divert the trolley onto a side track, but doing so will kill one person standing there.

This thought experiment was first devised in 1967 by the philosopher Philippa Foot as part of the debate on abortion. It has since become one of the most famous examples of its type and a staple of ethics and philosophy. At its core, the theoretical railway operator must decide whether to sacrifice an otherwise safe person to save five people in danger. Similar thought experiments have been devised using crashing planes, young and old pedestrians, bridges, and runaway freight trucks. While the original dilemma has been criticised as unrealistic, similar ethical dilemmas have occurred in real life, and examining them can shed light on human behaviour.

One of the biggest “trolley dilemmas” in recent memory followed the Chernobyl disaster of 1986, in which one of the reactors of the Chernobyl Nuclear Power Plant exploded and melted down, creating an Exclusion Zone covering approximately 2,600 km². Some 135,000 people had to be evacuated, including 50,000 from Pripyat, now a ghost town. At the time, the wind was carrying radioactive isotopes from the plant towards Moscow, the capital and most populous city of the Soviet Union. Rather than allow the isotopes to reach Moscow, the Soviet authorities chose to seed clouds so that the contamination would fall as rain over neighbouring Belarus. An estimated 60% of the radioactive contamination released by the accident fell on Belarus.

A more modern example of the trolley dilemma arises with autonomous cars. If a crash is unavoidable, the car must be programmed to decide whom to sacrifice, including whether its own driver and passengers should be put at risk. A study conducted at MIT found that most people preferred to save pregnant women, children, athletes, and high-status individuals over their counterparts. Whether programmers should cater to public preferences is a separate question, but in a consumerist society, they likely would.

If autonomous cars are ever to be programmed with life-or-death preferences, we must first understand our collective values. In a 2016 study in which participants had to choose between giving an electric shock to one mouse or letting five mice be shocked, 84% chose to shock the single mouse, reasoning that this would cause less harm. However, a subsequent study that asked participants which option they thought they would choose found that 66% would simply do nothing.

The trolley dilemma reveals an interesting facet of human behaviour. When difficult decisions involving the lives of others are hypothetical, people often prefer to keep their hands clean, but when faced with a real-life scenario, instinct suggests that saving more lives is the more ethical choice. Reduced to its simplest form, the trolley problem is a matter of arithmetic. When the radioactive cloud from Chernobyl was moving towards populous Moscow, Soviet officials looked at the maths and chose to divert it towards the far less populous Belarus. However, studies suggest that when pregnant women, children and loved ones are laid on the tracks, the results can differ. Likewise, Moscow's status as the prestigious capital of the authoritarian Soviet Union must be considered. The message? Moral decisions are never clear-cut.